Is AI Evolving Faster Than Expected?

New language model updates arrive almost every week, and models such as GPT-4, Gemini 1.5, Llama 3, and Claude 3.5 are becoming a bigger part of our daily lives. So, with all of these developments taking place, are we progressing too quickly, or at the expected pace?

If you came here only for the answer, it is a big no: AI is not evolving faster than expected. This was all anticipated, and there is more to come in the near future.

Brief Introduction

On June 6th, Leopold Aschenbrenner published situational-awareness.ai, dedicating his work to Ilya Sutskever. This post was inspired by his writings and other current sources, and it was prepared without going into technical details. (At least, I tried my best.)

First, the title of Aschenbrenner’s paper reminds me of situational leadership. Although the two are completely different things, examining them together can be revealing. Let me briefly summarize what came to mind. Situational leadership means directing, coaching, supporting, and delegating within a team. As we become more aware of AI, adopting a situational leadership approach becomes essential. Here, the team is a single person: we need to guide and educate ourselves to better understand AI, and then support and delegate our responsibilities in order to use AI effectively. This mindset allows us to maximize the benefits of AI.

What Happened?

Today, we can effortlessly accomplish daily tasks with AI models: coding, writing essays, solving math problems, planning travel, or coming up with random meal recipes. All of this happened within just a few years, and researchers predicted it accurately. You might ask, “How could they predict such a huge jump in AI capability, from GPT-2 to GPT-4, in about four years?”

I researched the past and future of artificial intelligence and discovered many things I should have known before. The chart below shows that 2023 was already expected to be a breakpoint for AI. If you look closely, you will notice one more significant date: 2045, when the singularity might happen. So, there is nothing to be surprised about here.

What Will Happen?

Back in 2015, Sutskever stated that “the models simply want to learn. You need to understand this. The models just want to learn.” In other words, given data and instructions, the models do the learning themselves. It implies that AI competence will keep increasing exponentially, but this does not happen on its own. The most important factors behind the acceleration of AI in recent years are the exponential growth in computing power and the gains in algorithmic efficiency.

Aschenbrenner builds his forecast on this point, predicting that by 2027, models will be able to do the work of an AI researcher or engineer. With 100,000 times more effective compute, we will see another significant leap, one unlike the earlier ones, even with GPT-4o already here. As someone who thinks in terms of “AI with Human” rather than “AI vs. Human,” I expect this development to take over most basic, unskilled jobs.

Especially after Apple’s announcement to integrate ChatGPT into iOS, we will be dragged into a life no different from a Black Mirror episode, where AI becomes a complementary part of everyday life.

Trends and Capabilities of Artificial Intelligence

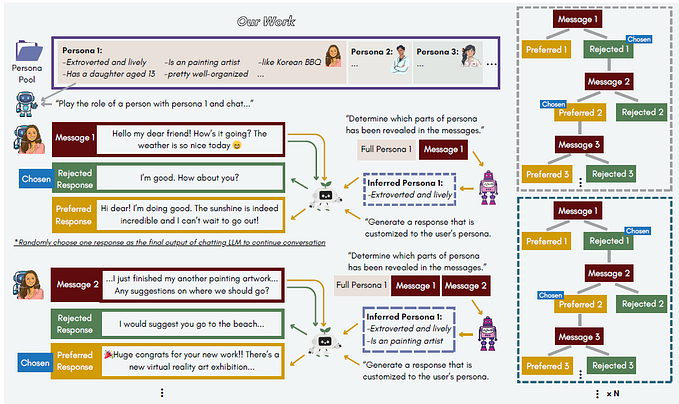

The rapid progress of deep learning shows us what AI is capable of. The two graphs show how this has changed over the years. The first graph covers the capabilities of AI up to 2020, which were limited to reading, image, writing, and speech recognition. Until then, we did not experience these capabilities through a GPT-style interface, although assistants like Alexa and Siri were doing similar tasks. Still, it would be unfair to compare Alexa with GPT in terms of its entry into our lives, its accessibility, and the areas it covers.

Turning to the graphs below: judged against the benchmarks, things that took months or years to learn can now be learned almost instantly. This shift lets us focus on how to use these capabilities effectively, which is why so many new AI-based apps are entering our lives: instant language translators, editing apps that replace Photoshop, chat apps, and many other new areas of use.

While these advances are remarkable for many industries, they also suggest that our benchmarks (the abilities of humanity) may soon run out, which leads us to the concept of the singularity.

How is this possible?

Computational Power

The first computers, such as the ABC and ENIAC, were as large as a room. Until the early 2000s, companies made technological progress in small steps. Later, as computational power grew, they began building far more powerful machines.

The chart below shows the improvements since 2010, most of which came after 2017. This trend runs in parallel with the advances in AI capabilities, indicating that we have shifted, and are still shifting, toward more advanced AI functionality. In the past, a single computer filled a room; now a single model runs in a warehouse full of computers.

This growth has its roots in Moore’s Law. Gordon Moore, co-founder of Intel, observed that the number of transistors on a microchip doubles approximately every two years while the cost of computing is halved. For a real-world example, recent research found that GPT-4’s training used approximately 3,000 to 10,000 times more computational power than GPT-2’s. This is consistent with compute growing through repeated doublings, roughly 12 to 13 of them in this case.
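To make the doubling idea concrete, here is a minimal Python sketch that converts the 3,000 to 10,000 times figure into a number of doublings. The four-year span is my own assumption, based on the release years of GPT-2 (2019) and GPT-4 (2023), and the function names are only illustrative.

```python
import math

def doublings(growth_factor: float) -> float:
    """Number of doublings needed to reach a given growth factor."""
    return math.log2(growth_factor)

def months_per_doubling(growth_factor: float, years: float) -> float:
    """Average months per doubling if the growth happened over `years`."""
    return years * 12 / doublings(growth_factor)

YEARS = 4  # assumption: GPT-2 (2019) to GPT-4 (2023)

for factor in (3_000, 10_000):
    print(
        f"{factor:>6}x -> {doublings(factor):.1f} doublings, "
        f"one every {months_per_doubling(factor, YEARS):.1f} months"
    )

# For comparison: doubling every two years gives only 2 doublings in 4 years.
moore_factor = 2 ** (YEARS / 2)
print(f"Two-year doubling over {YEARS} years -> {moore_factor:.0f}x")
```

Under these assumptions, training compute doubled every few months, much faster than a two-year doubling alone would give, so bigger clusters and bigger budgets must account for a large share of the growth.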

Algorithms

Compute investment alone did not bring AI here; algorithmic progress has played an important part as well. Aschenbrenner notes that algorithmic efficiency improved nearly 1,000 times in less than two years, using high school competition math as a benchmark. These algorithmic improvements act as compute multipliers. For example, imagine that training on 10,000 tokens costs $1,000. With new and better algorithms, the same performance can be achieved with 10 times less training compute, so the cost falls to about $100. Because each such gain multiplies the ones before it, the improvements follow an exponential rather than a linear trend.
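The $1,000 example can be written out as a small Python sketch. The helper names and the three successive 10 times gains are hypothetical, chosen only to show how efficiency gains multiply rather than add.

```python
import math

def cost_after_gain(baseline_cost: float, efficiency_gain: float) -> float:
    """Cost of reaching the same performance after an efficiency gain."""
    return baseline_cost / efficiency_gain

def combined_gain(gains: list[float]) -> float:
    """Successive efficiency gains multiply (they do not add)."""
    return math.prod(gains)

baseline = 1_000.0  # dollars, from the example in the text

# One 10x algorithmic improvement: same performance, a tenth of the cost.
print(cost_after_gain(baseline, 10))

# Three hypothetical 10x improvements in a row compound to 1,000x.
total = combined_gain([10, 10, 10])
print(total, cost_after_gain(baseline, total))
```

Three separate 10 times improvements add up to a 1,000 times gain, not 30 times, which is why this kind of progress looks exponential.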

Recent research estimates the relative training compute of several LLMs, and the chart below shows the compute needed to match Megatron-LM, GPT-2, and Gopher over time. By 2023, reaching the same performance as each of these models required 5 to 100 times less compute, and the required compute halved every 8 months on average.
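Here is a rough Python sketch of what an 8-month halving time means in practice. It assumes a smooth exponential decline, which is a simplification of the real data, and the function names are illustrative.

```python
def compute_needed(initial: float, months: float, halving_months: float = 8.0) -> float:
    """Compute required for the same performance after `months`,
    assuming it halves every `halving_months` (a smooth exponential)."""
    return initial * 0.5 ** (months / halving_months)

def yearly_reduction(halving_months: float = 8.0) -> float:
    """Factor by which the required compute shrinks in 12 months."""
    return 2 ** (12 / halving_months)

print(f"Reduction per year: {yearly_reduction():.2f}x")
for months in (8, 16, 24, 48):
    share = compute_needed(1.0, months)
    print(f"After {months:>2} months: {share:.4f} of the original compute")
```

With an 8-month halving time, the required compute shrinks by a little under 3 times per year and by 8 times in two years.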

In The End

Three key elements (data, algorithms, and compute) work together to drive AI development. Human capital ties this interaction together, applying domain knowledge, creativity, and technical skills to enhance artificial intelligence. This holistic approach helps AI achieve better accuracy, efficiency, and applicability in solving real-world problems.

What we are experiencing remains within the limits of what was expected, and we are also in an environment where future scenarios are already being predicted. Our aim should be to find ways to adapt to AI innovations faster rather than be surprised by them.